Nonlinear Functions & Optimization

A function is nonlinear if its graph is curved, i.e. not a straight line. The slope of a nonlinear function can differ across values of the independent variable.

The slope of the function at any particular point is its first derivative, the instantaneous rate of change.

- Equivalently in practice, the value of

Most applications in economics pertain to marginal magnitudes

- Slopes mean change, and the margin implies a small change

- Often describe the rate of substitution between two goods (how much

- At the limit, marginal magnitudes are derivatives of a total magnitude

- e.g. marginal cost (or revenue) is the derivative of total cost (or revenue), i.e. its slope at each value

- e.g. marginal product is the derivative of total product, i.e. its slope at each value
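To make the link between marginal and total magnitudes concrete, here is a minimal sketch in Python. The cubic cost function is a hypothetical chosen only for illustration (it does not come from these notes), and the slope is approximated with a central finite difference rather than computed symbolically.

```python
def total_cost(q):
    """Hypothetical total cost function (an assumption for illustration)."""
    return q**3 - 6 * q**2 + 15 * q + 10


def marginal_cost_exact(q):
    """Analytical derivative of total_cost: dC/dq = 3q^2 - 12q + 15."""
    return 3 * q**2 - 12 * q + 15


def numerical_derivative(f, x, h=1e-5):
    """Central-difference approximation of the slope of f at x."""
    return (f(x + h) - f(x - h)) / (2 * h)


if __name__ == "__main__":
    # The approximate slope of total cost should match marginal cost.
    for q in (1.0, 2.0, 4.0):
        approx = numerical_derivative(total_cost, q)
        exact = marginal_cost_exact(q)
        print(f"q = {q}: marginal cost ~ {approx:.4f} (exact {exact:.4f})")
```

Shrinking the step `h` is the numerical counterpart of "a small change at the margin": in the limit, the slope between two nearby points becomes the derivative.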

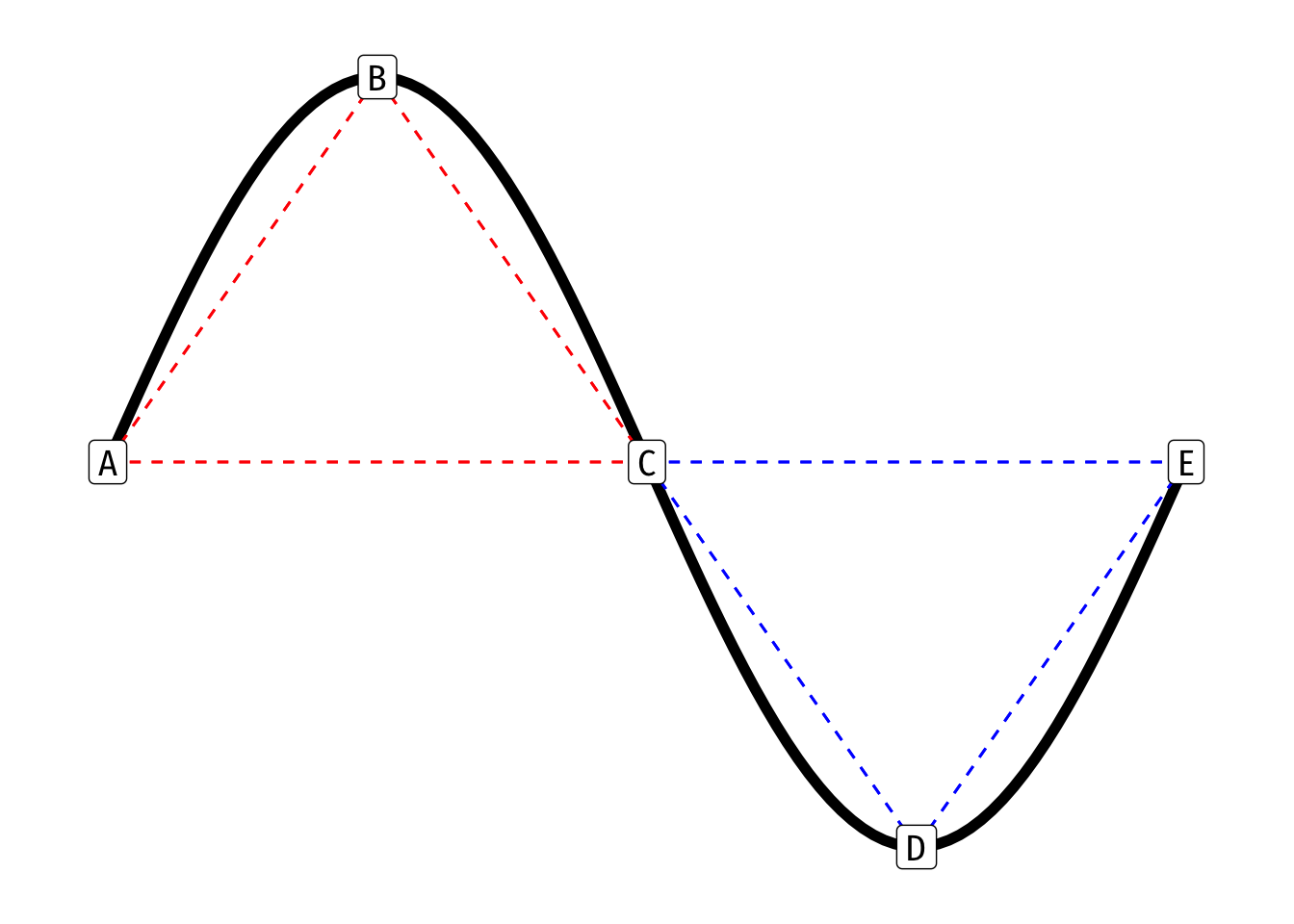

We can describe a curved function as being either convex or concave with respect to the origin (0,0)

In simplest terms, a function is concave between two points

- The above formula is a weighted average (for any set of weights

- The weighted average (dotted line) of

- A function is also concave at a point if its second derivative at that point is negative

In simplest terms, a function is convex between two points

A function switches between convex and concave at an inflection point (point C in the example above)

- Here, the second derivative (in addition to the first) is equal to 0
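For reference, the standard textbook definitions of concavity and convexity can be written as follows. The notation is an assumption: the symbols $f$, $a$, $b$ and the weight $t$ are illustrative and may differ from those used in the original slides.

```latex
% Concave between a and b: the function lies on or above the
% weighted average (the chord) of its endpoint values.
f\big(t a + (1 - t) b\big) \;\ge\; t f(a) + (1 - t) f(b)
\qquad \text{for all } t \in [0, 1]

% Convex between a and b: the inequality is reversed, so the
% function lies on or below the chord.
f\big(t a + (1 - t) b\big) \;\le\; t f(a) + (1 - t) f(b)
\qquad \text{for all } t \in [0, 1]

% Second-derivative versions at a point x:
f''(x) < 0 \;\Rightarrow\; \text{concave at } x,
\qquad
f''(x) > 0 \;\Rightarrow\; \text{convex at } x
```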

Optimization

For many curves, we want to find the value at which the function reaches its maximum or minimum (in general, these are types of “extrema”) along some interval

Formally, a function reaches a maximum at

A maximum or minimum in the interior of the interval occurs where the slope (first derivative) is zero, known in calculus as the “first-order condition”

- To distinguish between maxima and minima, we have the “second-order condition”

- A minimum occurs when the second derivative of the function is positive, and the curve is convex

- A maximum occurs when the second derivative of the function is negative, and the curve is concave

- An inflection point occurs where the second derivative of the function is zero

- All three are known as “critical points”

This is often useful for unconstrained optimization, e.g. finding the quantity of output that maximizes profits
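As a rough illustration of the first- and second-order conditions, the sketch below finds the profit-maximizing quantity for a hypothetical firm. The inverse demand curve (p = 100 - 2q) and cost function (20q + 50) are assumptions made up for this example, and the derivatives are approximated numerically instead of taken by hand.

```python
def profit(q):
    """Hypothetical profit: revenue (100 - 2q) * q minus cost 20q + 50."""
    revenue = (100 - 2 * q) * q
    cost = 20 * q + 50
    return revenue - cost


def first_derivative(f, x, h=1e-4):
    """Central-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


def second_derivative(f, x, h=1e-3):
    """Central-difference approximation of f''(x)."""
    return (f(x + h) - 2 * f(x) + f(x - h)) / (h * h)


def solve_first_order_condition(f, lo, hi, tol=1e-8):
    """Bisection on f'(q) = 0; assumes f' changes sign once on [lo, hi]."""
    slope_lo = first_derivative(f, lo)
    if slope_lo * first_derivative(f, hi) > 0:
        raise ValueError("f' does not change sign on the interval")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        slope_mid = first_derivative(f, mid)
        if slope_lo * slope_mid <= 0:
            hi = mid  # the sign change is in the left half
        else:
            lo, slope_lo = mid, slope_mid  # the sign change is in the right half
    return (lo + hi) / 2


def classify(f, x, eps=1e-6):
    """Apply the second-order condition at a point where f'(x) = 0."""
    curvature = second_derivative(f, x)
    if curvature < -eps:
        return "maximum"
    if curvature > eps:
        return "minimum"
    return "inconclusive (second derivative is approximately zero)"


if __name__ == "__main__":
    q_star = solve_first_order_condition(profit, 0.0, 50.0)
    print(f"q* ~ {q_star:.4f}, profit ~ {profit(q_star):.2f}")
    print("second-order condition:", classify(profit, q_star))
```

By hand, profit is -2q² + 80q - 50, so the first-order condition -4q + 80 = 0 gives q = 20, and the second derivative of -4 confirms a maximum.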

Note, if we have a multivariate function

Often we want to find the maximum or minimum of a function over some restricted values of

- We want to find the maximum of some function:

Much of microeconomic modeling is about figuring out what an agent’s objective is (e.g. maximize profits, maximize utility, minimize costs) and what their constraints are (e.g. budget, time, output).

There are several ways to solve a constrained optimization problem (see Appendix to Ch. 5 in textbook); the most common, though it requires calculus, is the Lagrangian multiplier method.
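A brief sketch of how the Lagrangian method works, using a hypothetical utility-maximization problem. The utility function $u(x, y) = xy$, the prices $p_x, p_y$, and income $m$ are assumptions chosen for illustration, not an example taken from the textbook.

```latex
% Problem: choose x and y to maximize utility subject to the budget
\max_{x, y} \; u(x, y) = x y
\quad \text{s.t.} \quad p_x x + p_y y = m

% Lagrangian: objective plus multiplier times the constraint
\mathcal{L}(x, y, \lambda) = x y + \lambda \, (m - p_x x - p_y y)

% First-order conditions
\frac{\partial \mathcal{L}}{\partial x} = y - \lambda p_x = 0, \qquad
\frac{\partial \mathcal{L}}{\partial y} = x - \lambda p_y = 0, \qquad
\frac{\partial \mathcal{L}}{\partial \lambda} = m - p_x x - p_y y = 0

% Dividing the first condition by the second eliminates lambda
\frac{y}{x} = \frac{p_x}{p_y}
\;\Rightarrow\; p_y y = p_x x

% Substituting into the budget constraint
x^{*} = \frac{m}{2 p_x}, \qquad y^{*} = \frac{m}{2 p_y}
```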

Graphically, the solution to a constrained optimization problem is the point where a curve (objective function) and a line (constraint) are tangent to one another: they just touch, but do not intersect (e.g. at point A below).

At the point of tangency (A), the slope of the curve (objective function) is equal to the slope of the line (constraint)

- This is extremely useful and is always the solution to simple constrained optimization problems

- e.g. maximizing utility subject to income

- e.g. minimizing cost subject to a certain level of output

- We can find the equation of the tangent line using point-slope form

- We need to know the slope

- We know
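The tangency condition and the point-slope tangent line can be checked numerically. This is a minimal sketch under stated assumptions: the utility function u(x, y) = xy, the prices, and the income level are all hypothetical, and the optimal bundle uses the closed-form solution for that particular utility function (x* = income / (2·px), y* = income / (2·py)).

```python
def utility(x, y):
    """Hypothetical utility function u(x, y) = x * y (an assumption)."""
    return x * y


def indifference_curve(x, u_level):
    """The y that keeps utility at u_level for a given x (from x * y = u_level)."""
    return u_level / x


def budget_line(x, px, py, income):
    """The y that exhausts income for a given x (from px*x + py*y = income)."""
    return (income - px * x) / py


def slope(f, x, h=1e-6):
    """Central-difference approximation of the slope of f at x."""
    return (f(x + h) - f(x - h)) / (2 * h)


def tangent_line(f, x0):
    """Point-slope form: y = y0 + slope * (x - x0), returned as a function."""
    y0 = f(x0)
    s = slope(f, x0)
    return lambda x: y0 + s * (x - x0)


# Hypothetical prices and income.
px, py, income = 2.0, 1.0, 40.0

# Optimal bundle for u = x * y (closed form for this utility function only).
x_star, y_star = income / (2 * px), income / (2 * py)
u_star = utility(x_star, y_star)


def curve(x):
    """Indifference curve passing through the optimal bundle."""
    return indifference_curve(x, u_star)


curve_slope = slope(curve, x_star)   # slope of the objective's level curve
budget_slope = -px / py              # slope of the constraint
tangent = tangent_line(curve, x_star)

print(f"optimum: x = {x_star}, y = {y_star}, utility = {u_star}")
print(f"curve slope ~ {curve_slope:.4f}, budget slope = {budget_slope:.4f}")
```

In this example the tangent line to the indifference curve at the optimum coincides with the budget line itself, which is exactly what "tangent, but not intersecting" means graphically.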